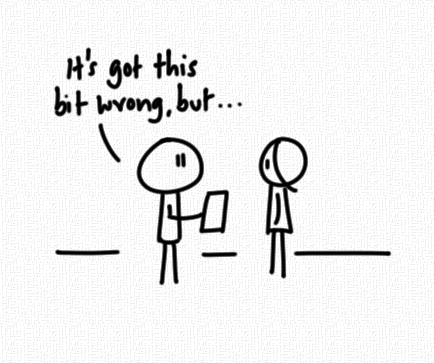

If you’ve ever presented to a decision maker, you know what happens when they spot that something’s off.

If one thing is wrong, the whole thing is wrong.

Go back and check it again.

I’ve recently been on the giving and receiving end of AI-generated material.

For example, I ran some company reports through a couple of tools I’d built.

These tools used different approaches to analyse the content of a document and give me a report.

Both reports had issues, things that I could spot immediately.

I now had two choices: take the report to someone else and point out that there were some errors.

Or review the source information and cross-check what had been produced.

What would you do?

Well, when I was recently given information that was wrong – or, more accurately, clearly hadn’t been reviewed – I didn’t go ahead with the deal.

I think we need to figure out where AI sits in workflows – and I’m starting to believe it’s not a solution in itself.

It’s not something that’s going to replace all your people – although you might stop hiring for certain roles.

It’s a tool.

What you produce is better or worse depending on how you use it, and how much you put into validating what comes out of it.