There’s a model I first came across ten years ago that helped me make sense of the ten years before that.

And I now think it’s a helpful tool for anyone looking to position themselves for the next ten years by figuring out what kind of operation they’re running.

This is what I learned.

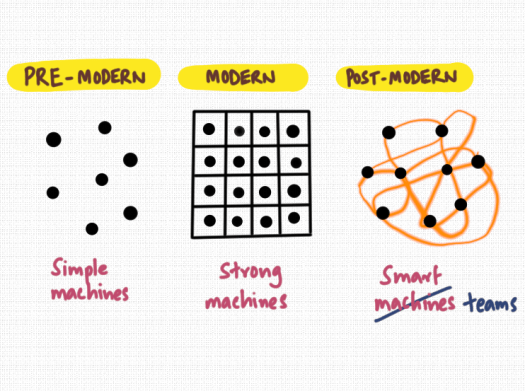

Once upon a time, there were butchers, bakers and candlestick makers.

In this pre-modern world you didn’t need to know about flour to operate a forge, or wax to make a loaf.

Individual professionals did their own thing with simple machines – hands, heat, hammers – and coexisted.

Then we had the industrial revolution and a step change in the way we made things.

Capital was deployed into factories and the modern world was born.

It was a world of strong machines, with workers who served the machines. The workers were ordered, structured, placed. They were interchangeable, replaceable, pieces on a board to be positioned and played by management.

Our modern hierarchical, command-and-control operating structure comes from this world.

And then, sometime in the last century, the post-modern world came into existence.

This was a world of smart machines. An information age. Of networks and connections. Where the links between things mattered as much as the things themselves.

And there are obvious differences between a world of things and a world of information.

If I have a hammer and I give it to you, now you have a hammer and I have nothing.

If I know how to do something and I teach it to you, we both know this thing. I lose nothing.

So, what kind of business do you operate? Are you a lone genius who does your own thing? Do you have a job in a corporation? Or are you part of a network?

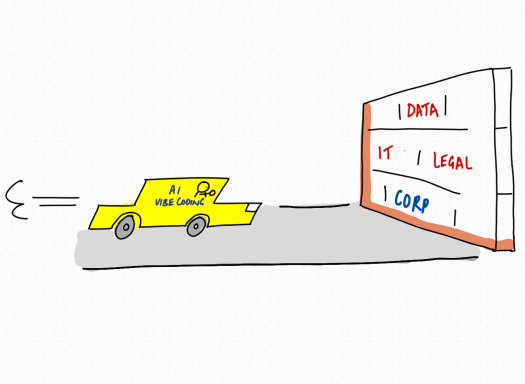

Knowing this gets even more important in this age of AI.

Now, the smart machines are everywhere. Anyone can have them.

Many people still think that they have to use modern methods to build organisations, relying on techniques for controlling and motivating people that were devised at least a hundred years ago.

The observant ones will notice that it’s now about teams: small groups of people who want to work with each other and use smart machines to supercharge what they do.

What kind of operation makes this possible? Who’s doing this already? What does great look like?

That’s the change that’s coming. Ready or not.