It used to take us 18 months to get a new client.

Anyone who works with large companies has experienced this.

From the first introduction to a signed proposal, it’s easily over a year.

It was anyway.

We usually travelled to meet the client.

That always felt a little unsustainable – burning fuel to find out how you could help someone use less fuel.

The sense that this was an important meeting always put some pressure on everyone attending, especially those responsible for sales.

You had spent time and money to get here, and the next 45 minutes were crucial to going ahead.

And that’s where things often fell apart.

The problem with a sales meeting or a demo is that we feel it’s our one chance to tell the client everything we do.

So we spent the first 80% of the meeting talking about ourselves – our backgrounds, our product, the problems we solve.

Then we spent the remaining 20% of the meeting asking what the client thought of us.

By the end of the meeting we had no idea what the client really wanted – because we hadn’t given them a chance to tell us.

So we doubled down, sending out huge proposals that set out everything we did again, hoping that something would land.

Unsurprisingly, that didn’t work either.

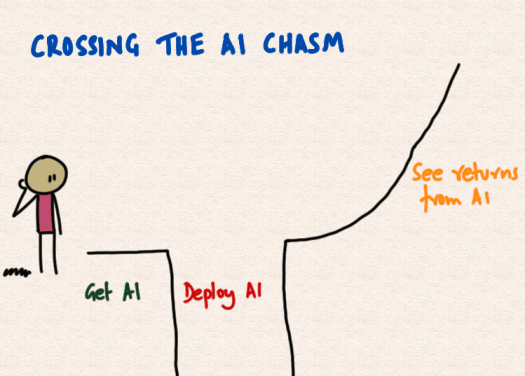

Eventually we learned how to do it better.

We had to talk less and listen more.

When we did that, we learned more about their situation and what they needed from someone – perhaps us if we were the right fit.

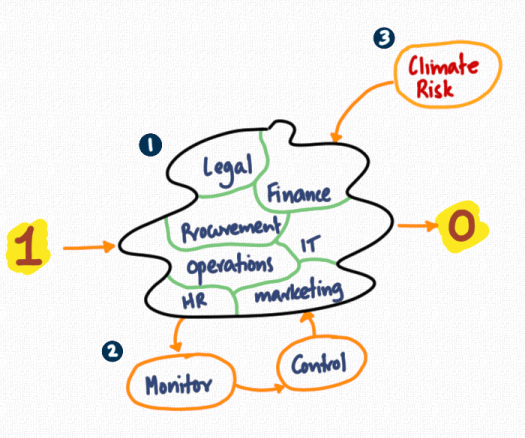

We built a process around this approach – one where we can figure out in a couple of hours whether we want to work together. It focuses on what matters to our client instead of wildly pitching everything we think we can do.

And it’s really simple.

It starts with a conversation rather than a sales pitch.